The Rising Tide of Deepfake Scams: A Critical Threat to the Crypto Space and Beyond

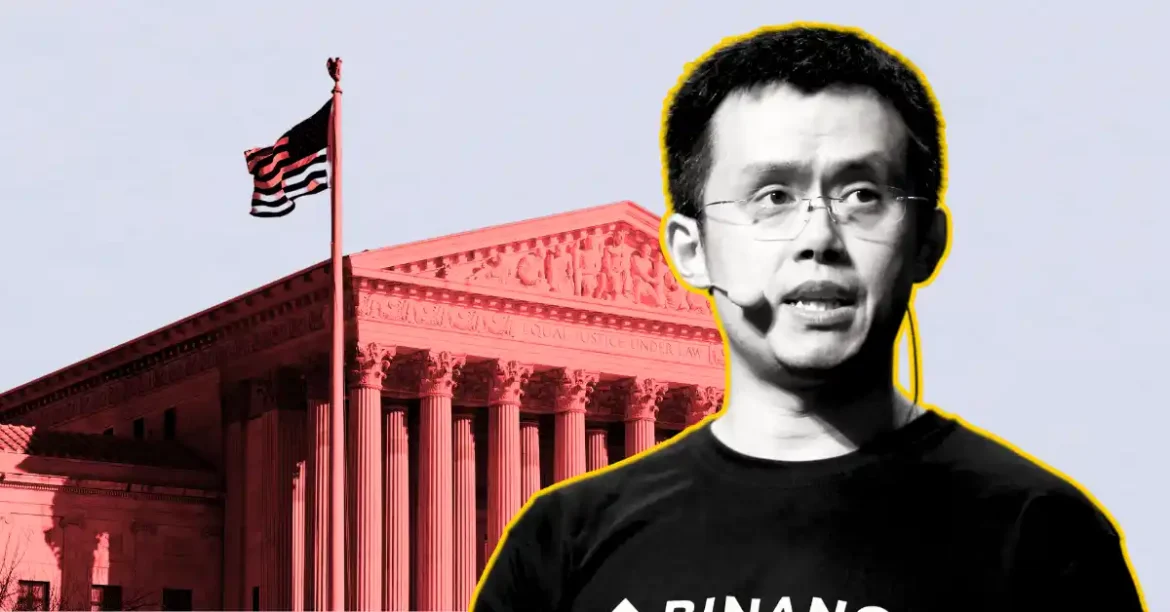

The digital landscape is undergoing a seismic shift, not due to technological advancement alone, but due to the malicious application of that advancement. Artificial intelligence, specifically the creation of increasingly sophisticated deepfakes, is rapidly emerging as a potent weapon in the hands of cybercriminals. Recent warnings from Changpeng Zhao (CZ), the founder of Binance, alongside a series of high-profile incidents, underscore the escalating threat posed by these AI-generated deceptions, not just within the cryptocurrency world, but across various sectors. The core issue isn’t simply the existence of deepfakes, but their growing realism and the erosion of trust in even traditionally secure verification methods.

The Catalyst: A Hacked Influencer and CZ’s Urgent Warning

The immediate impetus for CZ’s public alerts was the hacking of Japanese crypto influencer Mai Fujimoto’s X account. The breach wasn’t achieved through conventional means; it followed a ten-minute Zoom call with a deepfake impersonating someone she knew. By her account, the attackers had first taken over an acquaintance’s Telegram account, used it to set up the call, and then persuaded her to install malware that gave them control of her X account. The incident was a stark demonstration of how easily even tech-savvy individuals can be deceived.

CZ’s response was swift and direct: video call verification can no longer be relied on as a security measure. He cautioned the community against installing software from unofficial links, particularly when the request comes during a suspicious interaction. The warning wasn’t isolated. CZ has repeatedly highlighted the dangers of AI-driven impersonation, even sharing deepfake videos of himself promoting fraudulent cryptocurrency schemes. He has warned that within a couple of years, distinguishing real from AI-generated video will become virtually impossible.
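The advice about unofficial links can be made concrete with a small check. The following is a minimal sketch, not a complete defense: the allowlist and the `example.com` hosts are placeholders, and `is_official_link` is a hypothetical helper name. It accepts a link only if it uses HTTPS and its host is on the allowlist or is a subdomain of an allowlisted host.

```python
from urllib.parse import urlsplit

# Placeholder allowlist (assumption): a real one would hold the vendor's
# actual download domains, obtained from a trusted source.
OFFICIAL_HOSTS = frozenset({"example.com"})


def is_official_link(url: str, official_hosts=OFFICIAL_HOSTS) -> bool:
    """Return True only for HTTPS links hosted on an allowlisted domain."""
    try:
        parts = urlsplit(url)
    except ValueError:
        # Malformed URLs are treated as untrusted.
        return False
    if parts.scheme != "https":
        return False
    # .hostname strips any "user@" prefix and port, so lookalike tricks such
    # as "https://example.com@evil.test/" resolve to the real host, evil.test.
    host = (parts.hostname or "").lower().rstrip(".")
    return any(host == h or host.endswith("." + h) for h in official_hosts)
```

A lookalike such as `https://example.com.evil.test/installer.pkg` is rejected because the host must end with `.example.com`, not merely contain it. A check like this only covers the link itself; it says nothing about whether the person sending it is who they appear to be.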

Beyond Crypto: A Widespread Vulnerability

While the crypto community is currently a prime target, the threat of deepfakes extends far beyond digital currencies. Reported incidents reveal a pattern of deepfake attacks targeting prominent figures across diverse fields. Public figures such as Taylor Swift and Donald Trump have been the subjects of AI-generated videos, raising concerns about misinformation and political manipulation. More alarmingly, a finance worker at a multinational firm was defrauded of $25 million after being tricked by a deepfake of the company’s CFO during a video conference. Similarly, a UK energy company lost $243,000 to a scam that used deepfake audio to impersonate a CEO.

These incidents demonstrate that deepfakes aren’t confined to social media pranks or celebrity impersonations; they represent a significant financial and security risk to businesses and individuals alike. The sophistication of the technology allows criminals to convincingly mimic voices and appearances, making it increasingly difficult to discern genuine communication from fabricated deception.

The Mechanics of the Attack: From Telegram to Malware

The Fujimoto hack provides a clear illustration of the attack chain. It begins with a compromise of a less secure platform – in this case, Telegram. This initial breach provides access to sensitive information and establishes a foothold for further exploitation. The hackers then leverage this access to initiate a deepfake video call, exploiting the perceived security of visual verification.

The key lies in the seamless integration of deepfake technology with social engineering tactics. By presenting a realistic and convincing persona, the attackers gain the victim’s trust and lead them to install malware unknowingly. That malware then grants the attackers access to critical accounts and sensitive data. Reports also describe the use of deepfake holograms, a further sign of how quickly these attacks are evolving.
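One way to break this chain is to refuse any high-risk request that has not been confirmed over a second, independent channel. The sketch below illustrates that rule under stated assumptions: the action names, channel names, and the `Request` and `may_proceed` identifiers are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass

# Hypothetical categories of requests that should never be honored on trust alone.
HIGH_RISK_ACTIONS = frozenset(
    {"install_software", "transfer_funds", "share_credentials"}
)


@dataclass(frozen=True)
class Request:
    action: str                              # what the caller is asking for
    channel: str                             # where the request arrived
    confirmed_via: frozenset = frozenset()   # channels where it was re-confirmed


def may_proceed(req: Request) -> bool:
    """Allow low-risk requests; require out-of-band confirmation for the rest."""
    if req.action not in HIGH_RISK_ACTIONS:
        return True
    # A confirmation on the same channel as the request proves nothing,
    # because the attacker may control that channel.
    independent = req.confirmed_via - {req.channel}
    return len(independent) > 0
```

For example, `may_proceed(Request("install_software", "video_call"))` is false until the request is confirmed somewhere other than the video call itself. The independent channel should be one the attacker is unlikely to control, such as a call back to a phone number already on file.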

The Growing Scale of the Problem: A 50% Increase and Beyond

The threat isn’t static; it’s rapidly escalating. Reports indicate a 50% rise in AI deepfake attacks, a significant surge in malicious activity driven by the growing accessibility and affordability of the technology. Creating a deepfake once required specialized skills and significant resources; the tools are now readily available, enabling a far wider range of actors to run fraudulent schemes.

Furthermore, reports point to a growing “cybercriminal economy” built around deepfake technology. Threat actors are actively compiling video and audio clips of individuals to create convincing impersonations, effectively turning public appearances into raw material for fraud. The case of Patrick Hillman, Binance’s Chief Communications Officer, illustrates the point: his past interviews were used to build a deepfake hologram that was then deployed in attacks against crypto projects.

Regulatory Responses and the Need for Vigilance

Recognizing the severity of the threat, regulatory bodies are beginning to take action. Efforts are underway to combat deepfakes, focusing on protecting individuals and safeguarding electoral integrity. However, regulation alone isn’t sufficient. A multi-layered approach is required, encompassing technological solutions, enhanced cybersecurity awareness, and proactive risk mitigation strategies.

Coinbase’s top cyber executive emphasizes the importance of prioritizing security over convenience. This sentiment underscores the need for individuals and organizations to adopt more stringent verification procedures, even if they introduce friction into the process. Multi-factor authentication, robust password management, and a healthy dose of skepticism are essential defenses against deepfake attacks.
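To show what a second factor adds, here is a minimal sketch of time-based one-time passwords (TOTP, RFC 6238) using only the Python standard library. It is for illustration, not a production authenticator: the function names `totp` and `verify` are our own, and a real deployment would also need secure secret storage, rate limiting, and replay protection.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code (HMAC-SHA1) for the given Unix time."""
    now = time.time() if at is None else at
    counter = int(now // step)
    # HOTP (RFC 4226): HMAC over the 8-byte big-endian counter.
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select the offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, at=None, step: int = 30) -> bool:
    """Accept the current code, or one time step either side for clock drift."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + drift * step, step), submitted)
        for drift in (-1, 0, 1)
    )
```

With the RFC test secret `b"12345678901234567890"` and `at=59`, `totp` returns `"287082"`, matching the published test vectors. Because the code depends on a shared secret rather than on a face or a voice, a convincing deepfake alone cannot produce it. An attacker who talks the victim into reading the code aloud still can, which is why skepticism remains part of the defense.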

A Future Defined by Distrust: Navigating the Deepfake Era

The emergence of sophisticated deepfakes represents a fundamental challenge to trust in the digital age. As the technology continues to evolve, the line between reality and fabrication will become increasingly blurred. CZ’s warnings aren’t simply about protecting cryptocurrency investments; they’re about recognizing a broader societal threat.

The future demands a heightened level of digital literacy and critical thinking. We must learn to question the authenticity of everything we see and hear online, and to rely on verified sources of information. The era of unquestioning acceptance is over. The ability to discern truth from deception will be a crucial skill for navigating the increasingly complex and potentially treacherous digital landscape. The stakes are high, and the time to adapt is now.